You Can’t Outsource Accountability

BLUF | How responsible use of GenAI separates activity from intelligent action and moves you up and to the right on the Digital Efficiency Matrix.

You can delegate tasks, but you can’t delegate responsibility. GenAI accelerates work, but accountability must remain with the professional. Intelligent Transformation isn’t about building smarter systems; it’s about ensuring that as our systems get smarter, people remain responsible for what those systems do. Technology can speed the work, but people still own the results.

From Experimentation to Ownership

Across this series, from GenAI at Work to GenAI in Action to Digital Transformation Is Dead, each step revealed a layer of Intelligent Transformation: awareness, application, and philosophy. Now comes the behavioral layer: accountability.

As AI becomes more integrated into our workflows, responsibility doesn’t shrink. It expands to include the systems we design, prompt, and oversee. The professional standard hasn’t changed; only the scale and speed of our impact have.

When Accountability Slips

Over the past year, I’ve had the opportunity to help several organizations adopt generative AI into their mission and business processes. Across these experiences, I’ve seen a familiar pattern: some people begin treating the LLM as a pass-through. The system produces content, and they accept it with little scrutiny, minor editing, light validation, and little ownership.

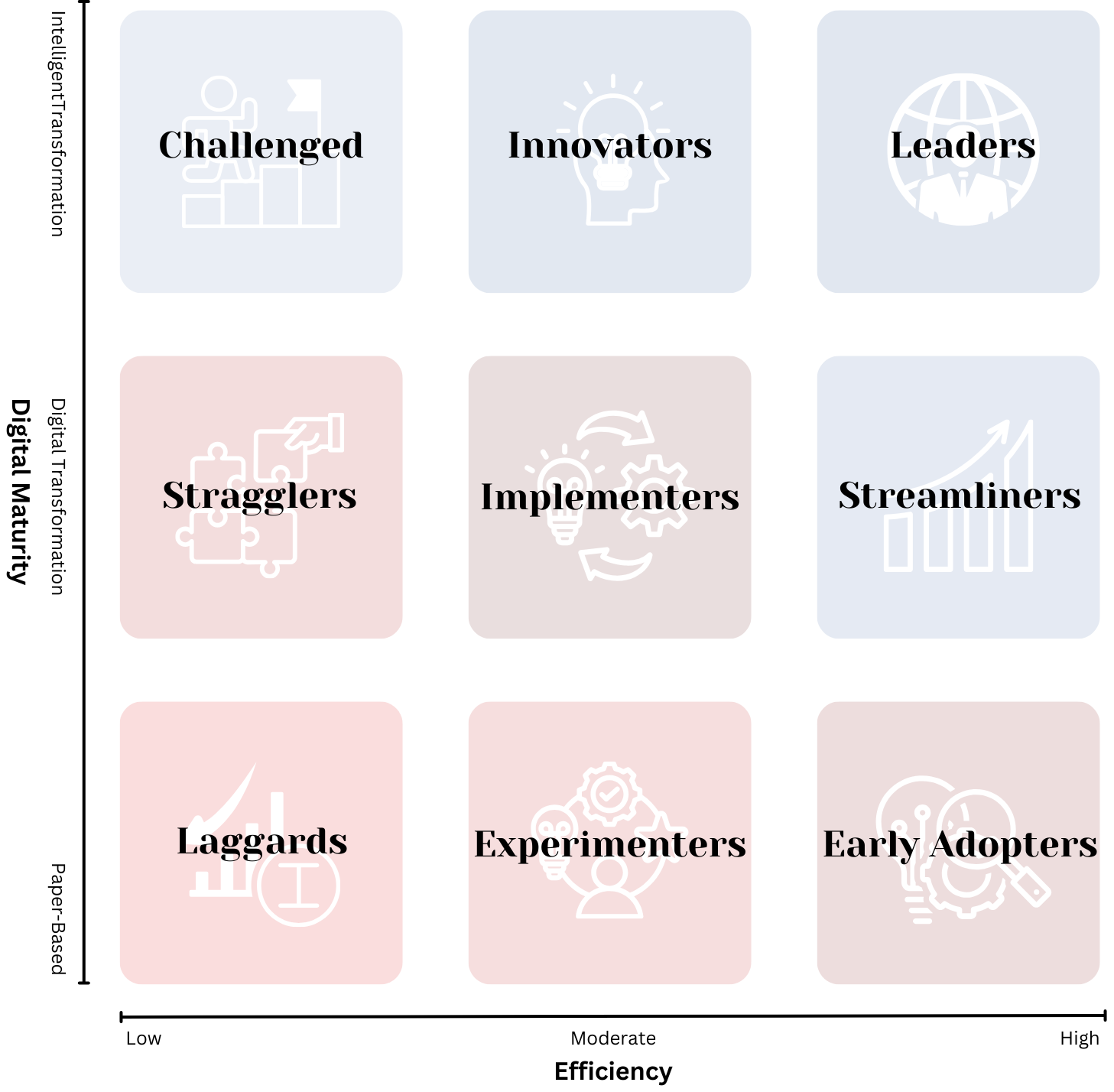

Quality declined. Trust eroded. Teams in this pattern fall into what I call the accountability gap, where automation runs ahead of professional judgment. On the Digital Efficiency Matrix, they tend to land in the Challenged quadrant: high digital maturity but low efficiency. Everything is digitized, but much of the work remains manual.

AI didn’t fail us; our relationship with accountability did.

Re-Centering Accountability

As a team, we reset the approach. To understand what was happening, we sat down with each team member and had them walk through their process step by step. From those discussions and the follow-up, we found three essentials for success:

- Validate outputs, don’t just forward them.

- Use AI to augment, not excuse.

- Maintain the same quality standards as before; faster doesn’t mean shallower.

We also coached on prompting, validation, and critical review. The message was clear: GenAI supports your work, but accountability stays with you. The bar doesn’t lower because of automation; it rises.

Within the 5Ps framework, the alignment was clearest across three of the Ps:

- People: Ownership and integrity

- Process: Verification and iteration

- Policy: Human in/on the loop governance

Accountability in Practice: Human in / on the Loop

Accountability doesn’t mean inserting humans everywhere. It means building systems that know when to bring humans back in.

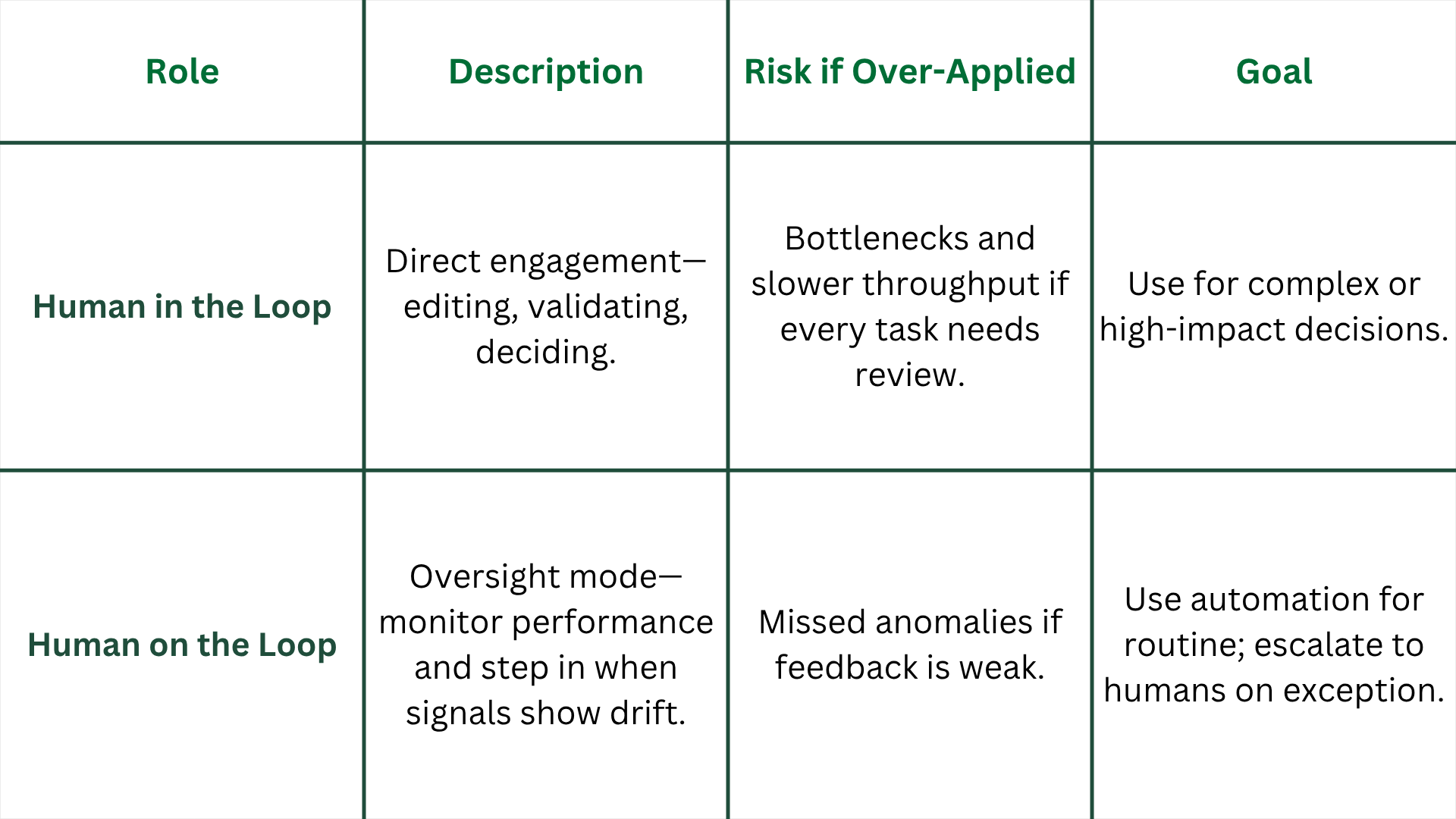

Table of Human IN or ON the loop

Think of it like a control chart. As long as outputs stay within expected bounds, automation runs efficiently. When a data point drifts more than one or two standard deviations from the mean, it becomes a signal for review, an invitation for the human to step back into the loop. For a clear, hands-on example of how control limits work in practice, check out Andy Kriebel’s interactive control chart. It illustrates how variation behaves over time and how quickly you can spot signals (red dots) that fall outside acceptable limits, just as we do when monitoring the performance of intelligent systems.

Andy Kriebel's interactive control chart (Screenshot from below interactive chart)

That’s accountability at scale: professionals aren’t micromanaging every output, but they remain responsible for defining thresholds, interpreting anomalies, and acting when things fall out of bounds.
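To make the threshold idea concrete, here is a minimal Python sketch of that routing logic. It is an illustration under stated assumptions, not the method any of these teams actually used: the quality scores, the function names `control_limits` and `route`, and the choice of `k=2.0` are all hypothetical. Classic control charts usually place their limits at three standard deviations; a tighter band like the one or two described above simply pulls the human back in sooner.

```python
from statistics import mean, stdev


def control_limits(baseline, k=2.0):
    """Return (lower, upper) limits as mean +/- k sample standard deviations."""
    center = mean(baseline)
    spread = stdev(baseline)
    return center - k * spread, center + k * spread


def route(scores, limits):
    """Label each score 'auto' (within limits) or 'review' (send to a human)."""
    lower, upper = limits
    return ["auto" if lower <= s <= upper else "review" for s in scores]


# Hypothetical quality scores from a period when every output was human-verified.
baseline = [0.82, 0.85, 0.80, 0.84, 0.83, 0.81, 0.86, 0.82]
limits = control_limits(baseline, k=2.0)

# Hypothetical scores for new, unreviewed outputs.
new_scores = [0.83, 0.80, 0.70, 0.85]
decisions = route(new_scores, limits)

for score, decision in zip(new_scores, decisions):
    print(f"{score:.2f} -> {decision}")
```

The code only raises the flag. Choosing `k`, deciding what counts as a quality score, and working out why the third output fell out of bounds all remain human responsibilities.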

As Mark Graban reminds us in Measures of Success, “The point isn’t creating charts. It’s about wasting less time chasing ‘noise’ in our metrics, which means more time that’s available to work on systemic improvement.” These charts are not just visuals; they’re prompts for focus and intelligent response.

Or, as he also notes, “Charts like these tell us there’s a signal, it doesn’t tell us why (that’s up to us as the users of the charts).” In other words, automation can surface anomalies, but judgment still owns the why (Graban, M. Process Behavior Charts Save Us Time, Help Us Sleep Better at Night, LeanBlog.org, 2017).

Accountability isn’t about doing everything yourself; it’s about building systems that know when to bring you back into the process.

From Dependence to Mastery

Once accountability returned, everything improved. Quality rose above pre-AI levels. Turnaround time dropped from many hours to minutes. The team moved from depending on the model to mastering it.

Within the Digital Efficiency Matrix (below), this team moved from Challenged to Innovators, and now sits firmly in the Leaders quadrant, where high digital maturity meets high efficiency. It is one of many programs, and others have yet to reach the Leaders quadrant. For organizations wishing to pursue similar progress, the path forward begins with identifying where each program or office currently sits within the matrix, then focusing on measurable accountability within that specific process area rather than trying to “boil the ocean.”

The Digital Efficiency Matrix (By J Eselgroth)

That’s how organizations scale Intelligent Transformation: not through sweeping change, but by improving individual systems and functions that collectively lift the enterprise.

Leadership lives in the top-right quadrant, where Intelligent Transformation and maximum efficiency converge.

The Leadership Imperative

Accountability is the invisible architecture of trust. You can delegate tasks, but not judgment. Systems don’t replace discernment; they amplify its consequences. Leaders must design for balance, keeping humans in and/or on the loop without reintroducing inefficiency. Intelligent oversight requires clear thresholds, transparent feedback loops, and escalation paths that work.

Delegation of tasks is smart. Delegation of judgment is dangerous.

Closing Reflection

AI can accelerate your work, but only you can validate its worth. True progress isn’t measured by how quickly we automate but by how confidently we can trust the results. Accountability turns automation into augmentation and Intelligent Transformation into trusted performance, because when professionals stay engaged, systems improve, teams mature, and outcomes are sustained over time.

This article is Part 4 of the Intelligent Transformation Series.

- Part 1 – GenAI at Work: Most organizations have met GenAI, but few have learned to work with it.

- Part 2 – GenAI in Action: Moving from experimentation to execution with purpose and structure.

- Part 3 – Digital Transformation Is Dead: Why it’s time to shift from doing digital to thinking intelligently.

- Part 4 – You Can’t Outsource Accountability: Maintaining human responsibility as automation scales.